Will AI-Generated Misinformation Impact the Results of the 2024 Presidential Election?

Editor's Note: This discussion on AI was originally published on Divided We Fall and features perspectives from UC Berkeley Professor Hany Farid and R Street Institute Fellow Chris McIsaac. It has been republished with permission from Divided We Fall. Photo by Muha Ajjan on Unsplash.

Poison in the Electoral Waters

By Hany Farid – Professor of Computer Science, UC Berkeley

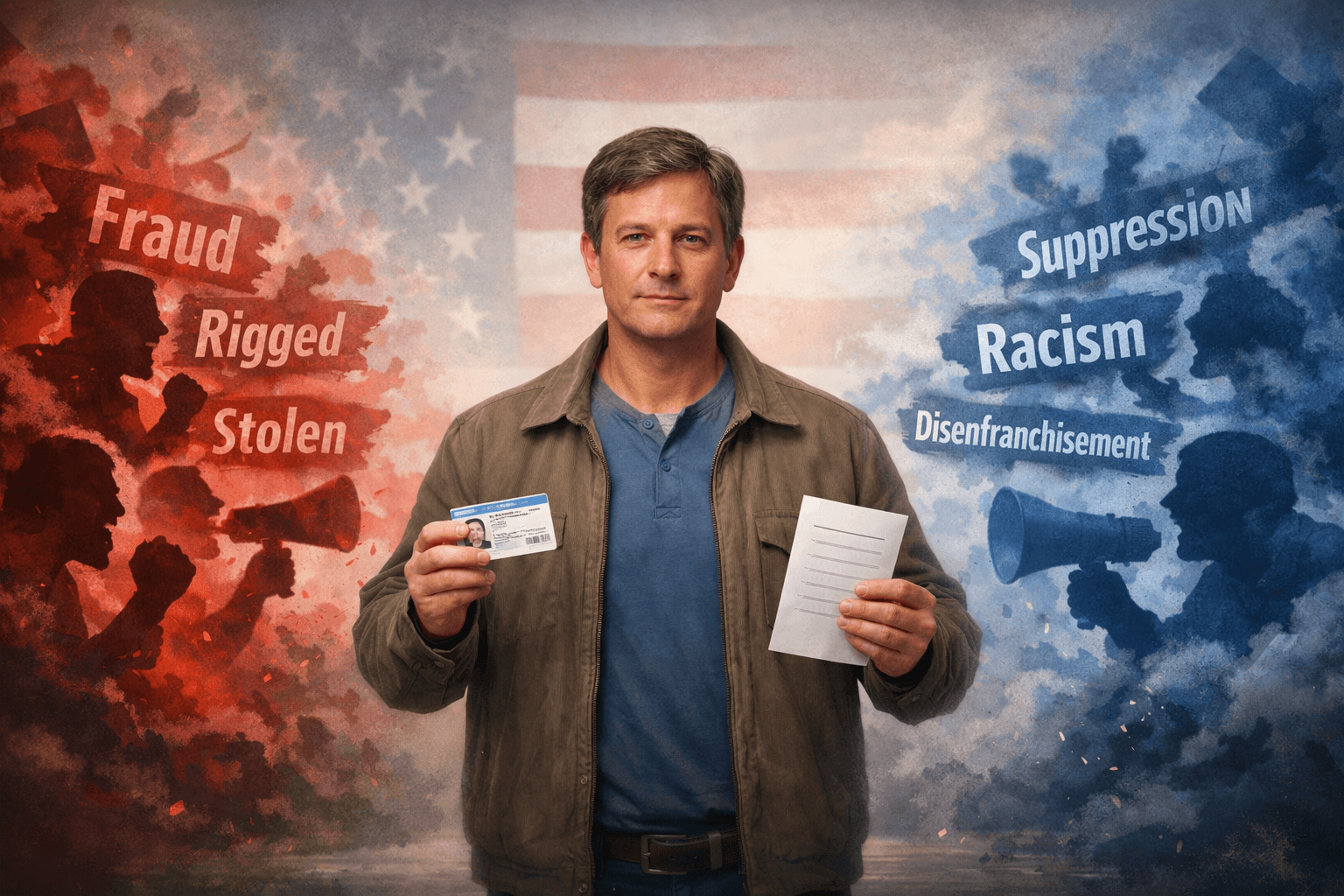

AI-generated misinformation will definitely impact the 2024 election, but we don’t know how significant the effect will be. AI-generated misinformation (e.g., deepfakes) includes machine-generated, human-like prose, images, audio, or video. This content manifests itself as Twitter/X bots pushing state-sponsored disinformation, fake photos of former President Trump surrounded by adoring Black voters, AI-generated robocalls in the voice of President Biden urging voters not to vote, or a bogus video of Biden misspeaking.

Generative AI has democratized access to sophisticated tools to create new content with unprecedented ease and realism. At the same time, social media has increased access to publishing content at an unprecedented scale and speed, and has unleashed algorithms that amplify polarizing speech. Furthermore, our society is deeply divided and untrusting of others, the media, and the government. These ingredients, not just AI, are creating a dangerous environment that threatens our social fabric and democracy.

Lessons from Abroad

We may have already seen an example of AI-generated misinformation impacting national politics. In September 2023, Slovakia saw a dramatic, last-minute shift in its parliamentary election. Just 48 hours before election day, the pro-NATO candidate Michal Šimečka was leading in the polls by four points. A fake audio recording of Šimečka claiming that he was going to rig the election spread quickly online, and two days later, the pro-Moscow candidate Robert Fico won the election by five points. It is not possible to say exactly how much the fake audio contributed to this nine-point swing, but this incident should serve as a cautionary tale.

We should not, however, focus exclusively on AI-powered misinformation. Over the past few months, for example, social and traditional media were awash in deceptive videos designed to emphasize stereotypes about President Biden’s age and mental state. Using nothing more sophisticated than cropping an image or trimming a video to remove context, these cheap fake videos didn’t need AI to be deceptive.

Subtle Manipulation with a Big Impact

Adding to the dangers, the difference in the last two U.S. national elections was measured in just tens of thousands of votes in key battleground states. Misinformation doesn’t have to sway millions of votes to impact an election. On account of the electoral college, moving relatively few votes in swing districts is enough to alter the outcome of the entire election.

Perhaps the more insidious side effect of deepfakes and cheap fakes is that they poison the entire information ecosystem. In this regard, the mere existence of deepfake technologies can influence election outcomes by casting doubt on any visual evidence.

Even AI agrees on the question at hand. When asked whether AI will impact the election, ChatGPT responded: “AI-generated misinformation has the potential to significantly impact the 2024 election, as it can be used to spread false information quickly and broadly. The overall impact will depend on the effectiveness of both the misinformation campaigns and the countermeasures implemented by platforms, governments, and civil society.”

Americans are Prepared for AI-Generated Misinformation

By Chris McIsaac – Fellow, R Street Institute

AI-generated misinformation will impact the 2024 election, but the impacts will be less significant than feared. AI and its potential to fuel disruptive election misinformation have been a hot topic in the media and among government officials in the lead-up to the 2024 election cycle. Over the past year, real-life examples of AI-generated deepfakes of Joe Biden and Donald Trump contributed to concerns that AI was leading us into a post-truth world and drove policy responses from lawmakers seeking to regulate deceptive use of the technology.

However, 60 percent of states have completed their primary elections this year and, so far, there have been no meaningful AI-driven disruptions. That does not mean we are out of the woods for November. However, elevated public awareness around the threat, extensive preparations by local election officials, and advances in detection technology suggest that the actual impacts will be far less significant than originally feared.

Credit to the People

One critical defense against misinformation is an informed public, and polling suggests that Americans are well aware of the potential risks of AI-generated misinformation. For example, an October AP poll found nearly 60 percent of Americans expect AI to increase the spread of election misinformation. More recently, an April survey from Elon University indicated that over three-quarters of the population anticipates deceptive uses of AI will impact the outcome of the presidential election.

These statistics are concerning, but they indicate a strong public understanding of the risks. Voters have taken a defensive posture when consuming information, which is a good thing, as healthy public skepticism can function as a bulwark against deception.

Preparation Can Reduce Risks

At the same time, state and local election officials are taking important steps to mitigate the effects of AI-generated misinformation by conducting planning exercises for election administrators to practice responding to various adverse events, including scenarios related to deepfakes and misinformation. Anticipating these situations and formulating responses in advance can help prevent minor incidents from accelerating into major disruptions to the election process.

Additionally, election officials are engaged in proactive public communications to solidify their status as the go-to source of trusted information about voting times, locations, and procedures. Building this trust in advance will give voters confidence about where to turn if they encounter suspicious information about a closed polling location or a change to the election schedule.

Finally, technology that identifies deepfakes is getting better every day. While current versions of AI detection tools are not perfect, market competition will provide a strong financial incentive to develop effective tools that will ultimately benefit voters and the entire information environment.

Overall, AI follows in the footsteps of past communication technologies that were poised to disrupt American elections, including radio, television, computers, the internet, and photo- or video-editing software. Yet our democracy persists. And while AI-generated misinformation will certainly impact the 2024 election to a minor extent, the intelligence and ingenuity of Americans will prevail over time.

Intelligent and Ingenious, but Not Immune

By Hany Farid – Professor of Computer Science, UC Berkeley

I agree with Mr. McIsaac that a well-informed public and a well-prepared government are necessary to help us defend against all forms of mis- and disinformation. However, I disagree that mis- and disinformation, including AI-powered disinformation, have not had a meaningful impact to date. There has been a significant impact, just not in the form of a single, major event. Instead, it has come in the form of a million cuts that have slowly but surely eroded the public’s trust in the government, media, and experts, and fueled a growing, and at times violent, distrust and hatred toward a significant number of our fellow Americans.

Gaps in Our Well-Informed Public Are Well-Documented

While I appreciate Mr. McIsaac’s optimism about the “resilience of our democracy and the intelligence and ingenuity of Americans,” I want to remind him of how misinformation has already influenced people.

The violent insurrection of January 6, 2021, fueled by Donald Trump’s Big Lie regarding a stolen election and amplified by both social and traditional media, left five people dead and hundreds injured. Subsequent calls by then-President Trump for the execution of his Vice President shook our democracy to its core. Even today, two-thirds of Republican voters (and 30 percent of Americans) believe that Trump won the 2020 election despite a lack of compelling evidence.

In late 2020, in the middle of the global COVID-19 pandemic, 22 percent of Americans believed that Bill Gates planned to use COVID-19 to implement a mandatory vaccine program with microchips to track people. This number, not surprisingly, tracks with the number of unvaccinated Americans and has propelled a larger distrust of all vaccines with the predictable detrimental effect on public health.

And today, as the planet continues to see record-breaking temperatures and devastating, unprecedented natural disasters, 15 percent of Americans believe that global climate change is not real and distrust the overwhelming scientific consensus to the contrary.

A Pre-existing Divide Now Supercharged by AI-Generated Misinformation

Roughly one in five Americans are utterly confused by basic facts of our most recent election, our public health, and our climate’s health. All this happened without the full power of generative AI and deepfakes.

Based on where we are today, I am less confident in the intelligence and ingenuity of the American public at a time when too many Americans are being manipulated and lied to by all forms of mis- and disinformation in both social and traditional media outlets.

The internet promised to democratize access to knowledge and make the world more open and understanding. The reality of today’s internet, however, is far from this ideal. Disinformation, lies, conspiracies, hate, and intolerance dominate our online and offline worlds. I contend that we as a society and democracy cannot move forward if we don’t share a common factual system. If the trends of the past decade continue and are supercharged by AI, our future is fragile and uncertain at best.

Excessive Regulations Are More Concerning than AI-Generated Misinformation

By Chris McIsaac – Fellow, R Street Institute

Mr. Farid and I agree that AI-generated misinformation will impact the 2024 election and that a well-informed public and well-prepared government can help defend against the harmful effects. We disagree on the public’s ability to effectively navigate the deteriorating information ecosystem, with Mr. Farid laying out a case for why Americans are ill-equipped to deal with the accelerating onslaught of misinformation. This leads him to conclude that if current trends continue, we are on a path to a “fragile and uncertain” future.

Potential for Harm Extends Beyond Misinformation

I, too, have concerns about the information environment and its impact on society moving forward. However, my concern is less about the misinformation itself and more about the potential harms of government responses that seek to regulate it through restrictions on free speech.

For example, lawmakers in Washington, D.C. and state capitals across the nation have jumped at the chance to propose new laws and regulations attempting to “fix” the problem of AI-generated misinformation in elections through various restrictions on political speech. Eighteen states now have laws that would either ban or require labeling of deceptive AI-generated political speech, with most of those laws approved in the last two years.

Similarly, federal lawmakers and agencies are getting in on the act, with the Federal Communications Commission, Federal Election Commission, and members of Congress proposing new laws and regulations related to the use of AI in elections. Proponents of these laws tout their importance for protecting the public from deception, but in practice, they are speech restrictions that could violate the First Amendment.

Setting aside the constitutionality of these proposals, if allowed to go into effect, they will be harmful and counterproductive. Trust in major American institutions including government and media has been falling for decades, and this decline has contributed to an environment where misinformation can flourish. Restricting speech will only exacerbate this distrust, empower those who spread lies, and truly set the stage for a fragile and uncertain future.

Working Toward a Different Future

With that said, there are opportunities for agreement on specific common-sense steps that can be taken to improve the information environment while protecting free speech:

1. Voters should remain skeptical of information consumed online, consult multiple sources to verify information, resist emotional manipulation, and take personal responsibility for not spreading false information.

2. State and local election officials should build trust with the public and practice responding to AI-driven disruptions before Election Day.

3. Media, civil society organizations, and private-sector technology companies should support voter education and drive public awareness campaigns about AI disinformation risks.

Overall, these actions can help mitigate the impacts of AI-generated misinformation in the 2024 election and beyond. While AI and misinformation are here to stay, cooperation among election stakeholders and persistent efforts by institutions to rebuild trust with the public can set the stage for a stronger future with more civil and truthful public dialogue.

About The Authors

Hany Farid is a Professor at the University of California, Berkeley with a joint appointment in Electrical Engineering & Computer Sciences and the School of Information. He is also the Chief Science Officer at GetReal Labs. His research focuses on digital forensics, forensic science, misinformation, image analysis, and human perception.

Chris McIsaac is a Fellow at the R Street Institute where he prepares research and analysis on electoral reform, AI and elections, and budget policy. Chris previously served as a pension policy consultant with The Pew Charitable Trusts where he provided technical assistance to state and local governments seeking to improve pension sustainability. Prior to that, he was an advisor in the Arizona Governor’s Office on issues related to taxes, pensions and energy policy. Chris holds bachelor's degrees in business administration and economics from Boston University.